WAVe-1B-Multimodal-PT: Word-Aligned Speech Quality Assessment

Model Description

WAVe-1B-Multimodal-PT is a multimodal embedding model of roughly one billion parameters that assesses the quality of synthetic speech by measuring speech-transcript alignment at the word level. It was trained on Portuguese data to identify high-quality synthetic audio suitable for ASR training.

The model combines:

- Text Encoder: XLM-RoBERTa (278M params) - multilingual text understanding

- Audio Encoder: Wav2Vec2-BERT 2.0 (581M params) - robust speech representations

- Word-Level Alignment: Multi-head attention + GLU scoring (14M params) - the core innovation

- Projection Layers: Enhanced 2-layer MLPs (10M params) - shared embedding space
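As a rough sanity check, the component sizes listed above can be summed and converted into a weight-storage estimate. This sketch assumes the total is simply the sum of the four listed components and that weights are stored as 32-bit floats (2 bytes for fp16/bf16); buffers, activations and optimizer state are ignored.

```python
# Parameter counts (in millions) taken from the component list above
COMPONENT_PARAMS_M = {
    "text_encoder": 278,
    "audio_encoder": 581,
    "word_alignment": 14,
    "projection": 10,
}

def total_params(components: dict) -> int:
    """Total parameter count, assuming it is just the sum of the listed components."""
    return sum(components.values()) * 1_000_000

def weight_bytes(num_params: int, bytes_per_param: int = 4) -> int:
    """Storage for the weights alone: 4 bytes per fp32 parameter, 2 per fp16/bf16."""
    return num_params * bytes_per_param

n = total_params(COMPONENT_PARAMS_M)
fp32_gib = weight_bytes(n) / 2**30
fp16_gib = weight_bytes(n, 2) / 2**30
print(f"{n / 1e6:.0f}M params, ~{fp32_gib:.2f} GiB in fp32, ~{fp16_gib:.2f} GiB in fp16")
```

The listed components add up to about 883M parameters, or roughly 3.3 GiB of fp32 weights, which matches the ~3.3GB figure under Limitations.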

Key Innovation

Unlike sentence-level approaches, WAVe uses attention-based word-level alignment to detect subtle quality issues:

- 🎯 Unnatural prosody or timing

- 🔍 Mispronunciations or synthesis errors

- ⚠️ Text-audio mismatches

- 📉 Poor audio quality

Performance Highlights

- 34% reduction in training steps for downstream ASR models

- 50% improvement in cross-domain generalization

- 30% less synthetic data needed compared to baseline filtering

- Effective detection of localized errors missed by sentence-level methods

How It Works

```python
import numpy as np
import torch
from transformers import AutoModel, AutoProcessor

# Load model and processor
# (trust_remote_code=True is assumed to be needed for the custom WAVe model class)
processor = AutoProcessor.from_pretrained("yuriyvnv/WAVe-1B-Multimodal-PT", trust_remote_code=True)
model = AutoModel.from_pretrained("yuriyvnv/WAVe-1B-Multimodal-PT", trust_remote_code=True)
model.eval()

# Prepare inputs
text = "Olá, como você está?"
# Placeholder: replace with your 16 kHz mono waveform (1-D float32 array)
audio = np.zeros(16000, dtype=np.float32)

inputs = processor(text=text, audio=audio, sampling_rate=16000, return_tensors="pt")

# Get quality assessment
with torch.no_grad():
    outputs = model(**inputs)

quality_score = outputs.quality_score.item()

# Interpret results
if quality_score >= 0.8:
    print(f"✓ HIGH QUALITY ({quality_score:.3f}) - Safe for ASR training")
elif quality_score >= 0.5:
    print(f"⚠ MEDIUM QUALITY ({quality_score:.3f}) - Review manually")
else:
    print(f"✗ LOW QUALITY ({quality_score:.3f}) - Discard")
```

Model Architecture

Components

Text Encoder: XLM-RoBERTa (paraphrase-multilingual-mpnet-base-v2)

- Hidden size: 768

- Multilingual support

Audio Encoder: Wav2Vec2-BERT 2.0 (facebook/w2v-bert-2.0)

- Hidden size: 1024

- Robust speech representations

Word-Level Alignment Module:

- Multi-head attention (6 heads)

- Multi-head Gated Linear Units (4 heads)

- Learned temperature scaling
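To make the three ingredients above concrete, here is a minimal single-head sketch in NumPy: text tokens attend over audio frames with a temperature-scaled softmax, and a GLU (value branch times sigmoid gate) turns each token plus its attended audio into a scalar score. This is an illustration under assumptions, not the released module: the real model uses 6 attention heads and 4 GLU heads, its temperature is learned rather than fixed, and the way the pieces are wired together here (concatenating token and attended audio, the weight names `w_value`, `w_gate`, `w_out`) is hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))

def word_alignment_sketch(token_emb, frame_emb, temperature, w_value, w_gate, w_out):
    """Hypothetical single-head word-to-frame alignment followed by a GLU scorer.

    token_emb: (num_tokens, d)  text token embeddings
    frame_emb: (num_frames, d)  audio frame embeddings in the same space
    Returns:
      alignment_matrix: (num_tokens, num_frames), each row sums to 1
      alignment_scores: (num_tokens,), unbounded per-token scores
    """
    d = token_emb.shape[-1]
    # Scaled dot-product attention: each token (query) attends over all frames;
    # the temperature is a fixed stand-in for the learned one
    logits = token_emb @ frame_emb.T / (np.sqrt(d) * temperature)
    alignment_matrix = softmax(logits, axis=-1)
    # Audio evidence gathered for each token
    attended = alignment_matrix @ frame_emb
    # GLU: value branch times sigmoid-gated branch, on [token; attended audio]
    features = np.concatenate([token_emb, attended], axis=-1)
    gated = (features @ w_value) * sigmoid(features @ w_gate)
    alignment_scores = gated @ w_out
    return alignment_matrix, alignment_scores

rng = np.random.default_rng(0)
d, d_glu = 8, 16
tokens = rng.normal(size=(5, d))    # 5 text tokens
frames = rng.normal(size=(40, d))   # 40 audio frames
attn, scores = word_alignment_sketch(
    tokens, frames, temperature=0.5,
    w_value=rng.normal(size=(2 * d, d_glu)),
    w_gate=rng.normal(size=(2 * d, d_glu)),
    w_out=rng.normal(size=d_glu),
)
print(attn.shape, scores.shape)  # (5, 40) (5,)
```

The attention matrix has the same token-by-frame layout as the `alignment_matrix` output described under Outputs; a multi-head version would run several such projections in parallel and combine them.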

Projection Layers:

- 2-layer MLPs with expansion-compression

- Output dimension: 768

- LayerNorm + GELU activation
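A projection head of this shape can be sketched as expand, GELU, compress to 768, then LayerNorm. The expansion factor of 2, the position of the LayerNorm after the compression layer, mean-pooled encoder states as input, and the random weights are all assumptions for illustration; learnable LayerNorm scale and shift are omitted.

```python
import numpy as np

def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """LayerNorm over the last axis (learnable scale/shift omitted for brevity)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def gelu(x: np.ndarray) -> np.ndarray:
    """GELU activation (tanh approximation)."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def projection_head(x, w1, b1, w2, b2):
    """Two-layer MLP: expand, GELU, compress to the shared size, then LayerNorm."""
    hidden = gelu(x @ w1 + b1)  # (batch, d_in) -> (batch, d_hidden), d_hidden > d_in
    out = hidden @ w2 + b2      # (batch, d_hidden) -> (batch, 768)
    return layer_norm(out)

def init_head(rng, d_in, d_hidden, d_out=768):
    """Random weights for illustration only (scaled so activations stay O(1))."""
    return (
        rng.normal(scale=d_in**-0.5, size=(d_in, d_hidden)), np.zeros(d_hidden),
        rng.normal(scale=d_hidden**-0.5, size=(d_hidden, d_out)), np.zeros(d_out),
    )

rng = np.random.default_rng(0)
# Hypothetical pooled encoder outputs for a batch of 2: text is 768-d, audio is 1024-d
text_states = rng.normal(size=(2, 768))
audio_states = rng.normal(size=(2, 1024))
text_embeds = projection_head(text_states, *init_head(rng, 768, 2 * 768))
audio_embeds = projection_head(audio_states, *init_head(rng, 1024, 2 * 1024))
print(text_embeds.shape, audio_embeds.shape)  # (2, 768) (2, 768)
```

The point of the two heads is that encoders with different hidden sizes (768 and 1024) end up in one 768-dimensional space, where their cosine similarity is meaningful.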

Training Details

- Dataset: Portuguese CommonVoice 16.1 + synthetic data

- Corruption strategies: 5 types (see paper)

- Training objective: Contrastive learning + word-level supervision

- Encoder fine-tuning: Last 3 layers unfrozen

- Optimization: AdamW with cosine scheduling

- Training epochs: 100 (best checkpoint selected at epoch 83 by similarity gap)
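The card does not spell out the contrastive objective, so the following is only one common form of it: a symmetric, CLIP-style InfoNCE loss in which each text is paired with its own audio and every other item in the batch serves as a negative. The temperature of 0.07 is an assumed value, and the word-level supervision term and the use of corrupted pairs are not modeled here.

```python
import numpy as np

def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def symmetric_info_nce(text_emb, audio_emb, temperature=0.07):
    """CLIP-style contrastive loss; row i of each input is a matched text-audio pair.

    Every other row in the batch acts as a negative for row i.
    """
    # L2-normalize so the dot products are cosine similarities
    t = text_emb / np.linalg.norm(text_emb, axis=-1, keepdims=True)
    a = audio_emb / np.linalg.norm(audio_emb, axis=-1, keepdims=True)
    logits = t @ a.T / temperature  # (batch, batch)
    idx = np.arange(len(t))
    loss_text_to_audio = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_audio_to_text = -log_softmax(logits, axis=0)[idx, idx].mean()
    return 0.5 * (loss_text_to_audio + loss_audio_to_text)

rng = np.random.default_rng(0)
text = rng.normal(size=(4, 768))
audio_matched = text + 0.1 * rng.normal(size=(4, 768))  # audio close to its own transcript
audio_shuffled = np.roll(audio_matched, 1, axis=0)       # pairs deliberately mismatched
print(symmetric_info_nce(text, audio_matched) < symmetric_info_nce(text, audio_shuffled))  # True
```

Minimizing a loss of this kind pushes matched pairs toward high cosine similarity and mismatched pairs toward low similarity, which is what the clean and corrupt similarity metrics below track.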

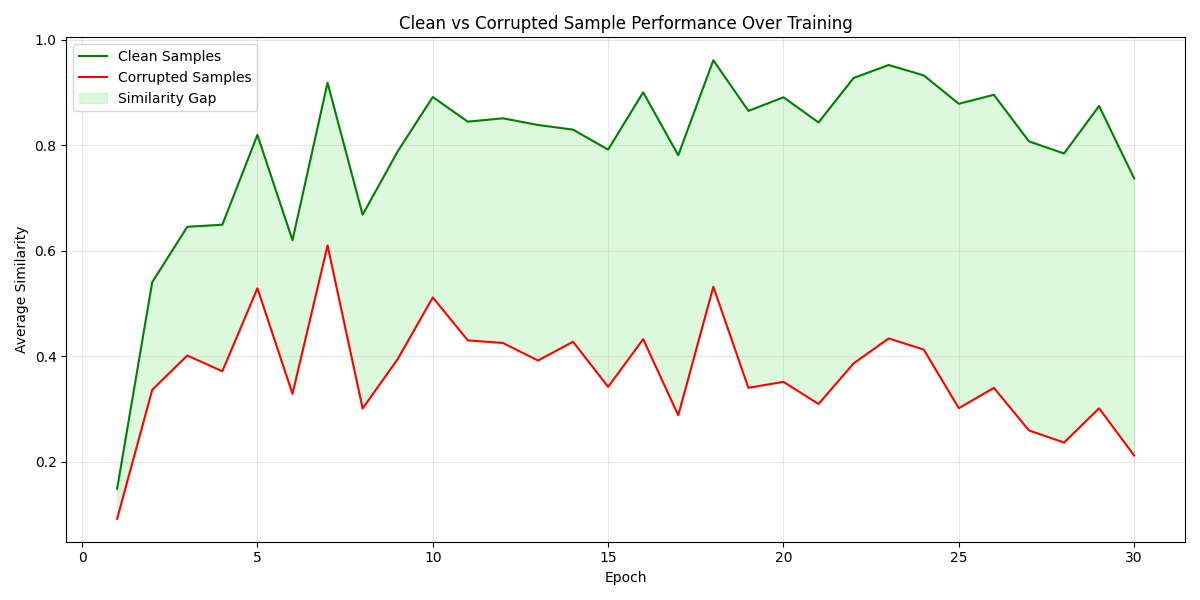

Training Metrics

The current model was trained for 100 epochs, improving substantially over the earlier 30-epoch version. Below we report the validation metrics for both checkpoints side by side.

| Metric | 30 Epochs | 100 Epochs | Change |
|---|---|---|---|
| Loss | 0.4949 | 0.2175 | -56% |
| Similarity Gap | 0.5744 | 0.5498 | -4% |
| Clean Similarity | 0.8834 | 0.7828 | -11% |
| Corrupt Similarity | 0.3090 | 0.2330 | -25% |
| Alignment Gap | 0.0791 | 0.1181 | +49% |

The most notable improvements after extended training are the sharp drop in loss and the wider alignment gap. A lower corrupt similarity (0.23 vs 0.31) means the model has learned to penalize mismatched audio-text pairs more aggressively. The alignment gap grew by about half (+49%), which should translate into better word-level error detection: the model is better placed to pinpoint local synthesis issues that the 30-epoch checkpoint would miss. The slight decrease in the overall similarity gap comes from the model becoming more conservative with clean scores (0.78 vs 0.88), trading a bit of sentence-level confidence for substantially stronger fine-grained alignment.
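The Change column can be reproduced directly from the table. The numbers are also consistent with the similarity gap being clean similarity minus corrupt similarity, which this short check confirms for both checkpoints.

```python
# Validation metrics copied from the table above: (30 epochs, 100 epochs)
metrics = {
    "Loss": (0.4949, 0.2175),
    "Similarity Gap": (0.5744, 0.5498),
    "Clean Similarity": (0.8834, 0.7828),
    "Corrupt Similarity": (0.3090, 0.2330),
    "Alignment Gap": (0.0791, 0.1181),
}

def relative_change(before: float, after: float) -> float:
    """Relative change in percent from `before` to `after`."""
    return 100.0 * (after - before) / before

for name, (e30, e100) in metrics.items():
    print(f"{name:<20} {relative_change(e30, e100):+.1f}%")

# Similarity gap recomputed as clean similarity minus corrupt similarity
for i, label in enumerate(("30 epochs", "100 epochs")):
    gap = metrics["Clean Similarity"][i] - metrics["Corrupt Similarity"][i]
    print(f"{label}: gap = {gap:.4f}")
```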

Training Progress

30 Epochs

At 30 epochs the model already separates clean from corrupted pairs, though the gap is still narrowing and the loss plateau has not yet been reached.

100 Epochs

With continued training the model reaches a more stable equilibrium. Corrupt similarity is pushed further down and the clean distribution tightens.

Outputs

The model returns comprehensive quality metrics:

```python
outputs.quality_score         # Overall quality [0, 1] - PRIMARY METRIC
outputs.cosine_similarity     # Sentence-level similarity [-1, 1]
outputs.mean_alignment_score  # Average word-level alignment [0, 1]
outputs.text_embeds           # Text embeddings (batch_size, 768)
outputs.audio_embeds          # Audio embeddings (batch_size, 768)
outputs.alignment_scores      # Per-word alignment scores (batch_size, num_tokens); sigmoid maps them to [0, 1]
outputs.alignment_matrix      # Word-to-frame attention (batch_size, num_tokens, num_frames)
```
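Because `alignment_scores` are per-token, they can be used to point at the words most likely to be mispronounced or misaligned. The helper below is a hypothetical sketch: it takes the token strings and one row of raw scores for a single sample, applies the same sigmoid used in the quality-score formula, and returns tokens under a threshold. Obtaining token strings that line up one-to-one with the scores (for example through the processor's tokenizer, skipping special tokens) is an assumption, and the 0.5 threshold is illustrative rather than a recommended value.

```python
import math

def stable_sigmoid(x: float) -> float:
    """Logistic function that avoids overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def flag_weak_tokens(tokens, alignment_scores, threshold=0.5):
    """Return (token, probability) pairs whose sigmoid-normalized alignment is below threshold."""
    flagged = []
    for token, score in zip(tokens, alignment_scores):
        prob = stable_sigmoid(score)
        if prob < threshold:
            flagged.append((token, round(prob, 3)))
    return flagged

# Illustrative values only, not real model output
tokens = ["Olá", "como", "você", "está"]
scores = [2.1, 1.7, -0.9, 1.3]
print(flag_weak_tokens(tokens, scores))  # [('você', 0.289)]
```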

Quality Score Formula

```python
import torch

# Step 1: Normalize cosine similarity from [-1, 1] to [0, 1]
cosine_normalized = (outputs.cosine_similarity + 1.0) / 2.0

# Step 2: Compute word-level alignment score (sigmoid per token, then mean over tokens)
alignment_normalized = torch.sigmoid(outputs.alignment_scores).mean(dim=-1)

# Step 3: Combine both signals with equal weight
quality_score = (cosine_normalized + alignment_normalized) / 2.0
```
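A worked example helps to see how the two signals combine. This plain-Python sketch applies the formula above to a single sample with made-up values; it assumes every score belongs to a real token (how the released model masks padding is not covered here).

```python
import math

def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def quality_score(cosine_similarity: float, alignment_scores: list) -> float:
    """Average of the normalized cosine similarity and the mean per-token sigmoid score."""
    cosine_normalized = (cosine_similarity + 1.0) / 2.0  # [-1, 1] -> [0, 1]
    alignment_normalized = sum(sigmoid(s) for s in alignment_scores) / len(alignment_scores)
    return (cosine_normalized + alignment_normalized) / 2.0

print(f"{quality_score(0.6, [2.0, 1.0, -1.0]):.4f}")  # 0.7135
```

Here the sentence-level term is 0.8 and the word-level term is about 0.627 (one poorly aligned token pulls it down), giving a score of about 0.713. That falls in the Medium band, even though the sentence-level similarity alone would look fairly good.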

Recommended Thresholds

Based on our experiments:

| Quality Score | Label | Recommendation |
|---|---|---|
| 0.8 - 1.0 | High | ✅ Safe for ASR training |
| 0.5 - 0.8 | Medium | ⚠️ Review manually or use with caution |
| 0.0 - 0.5 | Low | ❌ Discard from training set |
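The table can be encoded as a small helper for filtering scripts. In this sketch the boundaries are assigned the same way as the `>=` checks in the How It Works example (0.8 counts as High, 0.5 as Medium); the function name `quality_label` is hypothetical.

```python
def quality_label(score: float) -> str:
    """Map a quality score in [0, 1] to the labels in the thresholds table."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"quality score must be in [0, 1], got {score}")
    if score >= 0.8:
        return "High"
    if score >= 0.5:
        return "Medium"
    return "Low"

print(quality_label(0.85), quality_label(0.62), quality_label(0.31))  # High Medium Low
```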

Usage Examples

Batch Processing

```python
import torch

# Process multiple samples efficiently (assumes `processor` and `model` are loaded as above)
texts = ["Text 1", "Text 2", "Text 3"]
audios = [audio1, audio2, audio3]  # your 16 kHz mono waveforms

inputs = processor(
    text=texts,
    audio=audios,
    sampling_rate=16000,
    padding=True,
    return_tensors="pt",
)

with torch.no_grad():
    outputs = model(**inputs)

quality_scores = outputs.quality_score  # Shape: (3,)

# Filter high-quality samples: flat list of indices with score >= 0.8
high_quality_indices = (quality_scores >= 0.8).nonzero(as_tuple=True)[0].tolist()
```
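For datasets larger than one padded batch can hold in memory, the same call can be repeated over fixed-size chunks. The helper below is a hypothetical, framework-free sketch; a batch size that fits a given GPU has to be found empirically.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")

def iter_batches(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most batch_size items (the last batch may be shorter)."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    it = iter(items)
    while batch := list(islice(it, batch_size)):
        yield batch

# Usage with the batch call above (texts/audios as before):
# for batch in iter_batches(zip(texts, audios), 16):
#     batch_texts, batch_audios = zip(*batch)
#     inputs = processor(text=list(batch_texts), audio=list(batch_audios),
#                        sampling_rate=16000, padding=True, return_tensors="pt")
print(list(iter_batches(range(5), 2)))  # [[0, 1], [2, 3], [4]]
```

Grouping clips of similar length into the same batch would also reduce padding, though that is an optimization beyond this sketch.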

Dataset Filtering

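```python
import torch
from datasets import Audio, load_dataset

# Load a single split so that iteration yields samples rather than split names
dataset = load_dataset("your-synthetic-dataset", split="train")
# Decode and resample audio to the 16 kHz the model expects
dataset = dataset.cast_column("audio", Audio(sampling_rate=16000))

filtered = []
for sample in dataset:
    inputs = processor(
        text=sample["text"],
        audio=sample["audio"]["array"],
        sampling_rate=16000,
        return_tensors="pt",
    )
    with torch.no_grad():
        quality = model(**inputs).quality_score.item()
    if quality >= 0.8:
        filtered.append(sample)

print(f"Kept {len(filtered)}/{len(dataset)} samples ({100 * len(filtered) / len(dataset):.1f}%)")
```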

Integration with Training Pipeline

```python
import torch
from transformers import Trainer, TrainingArguments

def quality_filter_fn(example):
    """Filter function for HF datasets: keep examples with quality_score >= 0.8."""
    inputs = processor(
        text=example["text"],
        audio=example["audio"]["array"],
        sampling_rate=16000,
        return_tensors="pt",
    )
    with torch.no_grad():
        quality = model(**inputs).quality_score.item()
    return quality >= 0.8

# Apply to dataset (raw_dataset: your synthetic dataset with 16 kHz audio)
filtered_dataset = raw_dataset.filter(quality_filter_fn)

# Train ASR model on filtered data (asr_model: your ASR model)
training_args = TrainingArguments(output_dir="asr-filtered")
trainer = Trainer(
    model=asr_model,
    args=training_args,
    train_dataset=filtered_dataset,  # High-quality synthetic data only
    # ... other arguments (data collator, eval dataset, etc.)
)
```

Limitations

- Language-specific: Trained on Portuguese; may not generalize to other languages

- Audio format: Expects 16kHz mono audio

- Context window: Limited to sequences processable by encoders (~30 seconds)

- Computational cost: Weights take ~3.3GB, plus inference compute
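The input-related limitations can be checked before scoring. This hypothetical helper works on basic metadata (sample count, sampling rate, channel count) and reports what would need fixing; the 30-second cut-off mirrors the approximate figure above and is not an exact encoder limit.

```python
def check_audio_input(num_samples: int, sampling_rate: int, num_channels: int,
                      max_seconds: float = 30.0) -> list:
    """Return a list of problems that violate the stated input assumptions (empty if none)."""
    problems = []
    if sampling_rate != 16000:
        problems.append(f"resample from {sampling_rate} Hz to 16000 Hz")
    if num_channels != 1:
        problems.append(f"downmix {num_channels} channels to mono")
    duration = num_samples / sampling_rate
    if duration > max_seconds:
        problems.append(f"clip is {duration:.1f} s, longer than ~{max_seconds:.0f} s")
    return problems

print(check_audio_input(16000 * 10, 16000, 1))  # []
print(check_audio_input(44100 * 40, 44100, 2))
```

Resampling and downmixing can be done before scoring; clips that are too long are usually better dropped or re-synthesized than cut, since cutting the audio would also require cutting the transcript at a matching word boundary.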

Citation

If you use this model, please cite:

```bibtex
@article{perezhohin2024wave,
  title={WAVe: Word-Aligned Verification of Synthetic Speech for ASR},
  author={Perezhohin, Yuriy and Castelli, Mauro},
  journal={arXiv preprint},
  year={2024}
}
```

Related Work

- Paper: [Coming soon]

- Code: https://github.com/yuriyvnv/WAVe

- Models: https://huggingface.co/yuriyvnv

Other Models in the Series

- yuriyvnv/wave-dutch - Dutch language version

- yuriyvnv/whisper-large-v3-portuguese-filtered - ASR trained on WAVe-filtered data

- yuriyvnv/whisper-large-v3-portuguese-baseline - ASR baseline without filtering

License

Apache 2.0

Acknowledgements

- Text encoder: sentence-transformers/paraphrase-multilingual-mpnet-base-v2

- Audio encoder: facebook/w2v-bert-2.0

- Training data: Mozilla CommonVoice 16.1 Portuguese
