Shanzhi-M1

🌟 Model Overview

Shanzhi-M1 is a medical LLM alignment framework developed by Shanghai Mingpin Medical Data Technology Co., Ltd. Built on the Qwen3-32B base model, it addresses three core pain points of existing medical LLMs (misalignment with clinical cognition, poor adaptation to dynamic standards, and the high cost of reward training) through three innovative designs. The framework integrates authoritative medical standards into the full training pipeline, moving medical AI from "technically feasible" to "medically trustworthy."

Core Innovations

- 3D Medical Standard System ("Dimensions-Scenarios-Disciplines"): Embeds domain standards (e.g., accuracy, compliance, empathy) into a structured matrix to guide data generation, SFT, and RL, resolving the disconnect between static evaluation and dynamic clinical needs.

- Independent Multi-Dimensional Reward Model: Decomposes medical evaluation into separate criteria (instead of a single scalar score) and internalizes them as learned rewards, replacing high-cost real-time rubric scoring; this improves consistency and reduces expert labor by over 90%.

- Geometric Projection Reference Constraints: Translates medical cognitive logic (e.g., "medium answers should lie between good/poor") into mathematical regularization, ensuring scoring gradients align with clinical reasoning and enabling training on large-scale synthetic data.
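The card does not give the exact form of the geometric projection constraint. The sketch below is one hypothetical reading, for illustration only: project the medium answer's representation onto the segment between the good and poor answers' representations, turn the projection coefficient into a reference score interpolated between the good and poor scores, and penalize the medium score's distance from that reference. The function names, the use of plain lists as embeddings, the midpoint fallback, and the squared-error penalty are all assumptions.

```python
from typing import Sequence


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def projection_coefficient(
    h_good: Sequence[float], h_med: Sequence[float], h_poor: Sequence[float]
) -> float:
    """Position of h_med projected onto the segment h_poor -> h_good, clamped to [0, 1].

    0 means "at the poor answer", 1 means "at the good answer".
    """
    axis = [g - p for g, p in zip(h_good, h_poor)]
    norm_sq = _dot(axis, axis)
    if norm_sq == 0.0:
        return 0.5  # hypothetical fallback: good and poor coincide, assume the midpoint
    offset = [m - p for m, p in zip(h_med, h_poor)]
    t = _dot(offset, axis) / norm_sq
    return min(1.0, max(0.0, t))  # clamping projects onto the segment, not the whole line


def geometric_constraint_loss(
    r_good: float, r_med: float, r_poor: float,
    h_good: Sequence[float], h_med: Sequence[float], h_poor: Sequence[float],
) -> float:
    """Hypothetical GC penalty: pull the medium score toward its projected reference score."""
    t = projection_coefficient(h_good, h_med, h_poor)
    r_ref = r_poor + t * (r_good - r_poor)  # reference score lies between poor and good
    return (r_med - r_ref) ** 2


# Toy example: the medium embedding sits 30% of the way from the poor to the good answer.
h_poor, h_good, h_med = [0.0, 0.0], [1.0, 0.0], [0.3, 0.4]
print(projection_coefficient(h_good, h_med, h_poor))  # 0.3
print(geometric_constraint_loss(2.0, 0.5, -1.0, h_good, h_med, h_poor))
```

Because the reference score is always an interpolation between the good and poor scores, minimizing this penalty keeps the medium score inside that interval, which is the ordering the bullet above describes.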

Core Highlights

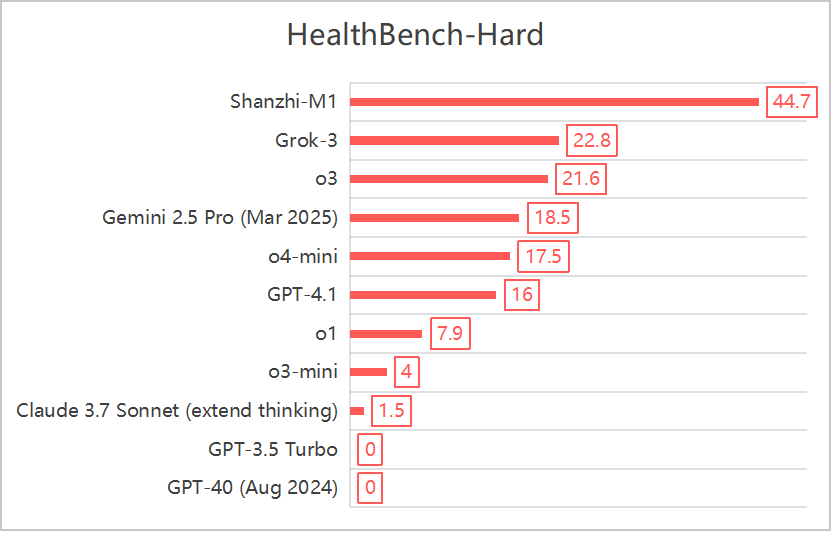

- 🏆 Top-Performing Open-Source Medical Model: Achieves 62.7 on HealthBench (Full) and 44.7 on HealthBench (Hard), outperforming all open-source models and most closed-source counterparts (e.g., OpenAI o3, Gemini 2.5 Pro).

- 🩺 Clinical Scenario Excellence: Leads in 5 core medical scenarios (Emergency Referrals: 74.3, Communication: 69.6, Context Awareness: 52.4, etc.).

- 💰 Cost-Efficient: Compresses expert annotation labor to <1/10 of traditional methods while maintaining clinical effectiveness.

- 🔧 Standard-Extensible: Supports dynamic updates to multi-source medical guidelines (regional, disciplinary, scenario-specific).

📊 Performance Metrics

HealthBench

Scenario-Specific Performance

| Clinical Scenario | Score | Performance Note |
|---|---|---|
| Emergency Referrals | 74.3 | Highest among all tested models; prioritizes risk timeliness |
| Medical Communication | 69.6 | Excels in patient adherence guidance |
| Context Seeking | 58.5 | Strong at proactive clinical information collection |
| Global Health | 59.2 | Adapts to diverse regional medical standards |
| Context Awareness | 52.4 | Maintains consistency across multi-turn clinical conversations |

🔧 Technical Features

1. 3D Medical Standard Matrix Construction

- Variables:

  - L (Core Dimensions): e.g., Information Content Quality, Clinical Reasoning, Compliance.
  - M (Scenarios): e.g., Chronic Disease Management, Pediatric Consultation, Emergency Triage.
  - N (Disciplines): e.g., Internal Medicine, Surgery, Pharmacy, Nursing.

- Matrix Role: Guides structured data generation (questions, multi-quality answers, rubrics) to ensure coverage of all clinical scenarios.
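As a rough illustration of how an L × M × N matrix can guarantee coverage, the sketch below enumerates every (dimension, scenario, discipline) cell and emits one generation task per cell. The axis values are the examples listed above; the `GenerationTask` record, its prompt wording, and the one-task-per-cell scheme are hypothetical, not the authors' pipeline.

```python
from dataclasses import dataclass
from itertools import product

# Example axis values from the card; a real matrix would be larger.
DIMENSIONS = ["Information Content Quality", "Clinical Reasoning", "Compliance"]          # L
SCENARIOS = ["Chronic Disease Management", "Pediatric Consultation", "Emergency Triage"]  # M
DISCIPLINES = ["Internal Medicine", "Surgery", "Pharmacy", "Nursing"]                     # N


@dataclass(frozen=True)
class GenerationTask:
    """Hypothetical record for one cell of the 3D standard matrix."""
    dimension: str
    scenario: str
    discipline: str

    def instruction(self) -> str:
        # Each cell asks for a question, good/medium/poor answers, and a rubric.
        return (
            f"Write a question in the {self.scenario} scenario for {self.discipline}, "
            f"with good, medium, and poor answers and a rubric for the {self.dimension} dimension."
        )


def build_tasks() -> list[GenerationTask]:
    """One task per (dimension, scenario, discipline) cell, so no cell is skipped."""
    return [GenerationTask(d, s, n) for d, s, n in product(DIMENSIONS, SCENARIOS, DISCIPLINES)]


tasks = build_tasks()
print(len(tasks))  # 3 * 3 * 4 = 36
print(tasks[0].instruction())
```

Enumerating the Cartesian product, rather than sampling cells, is what makes coverage explicit: adding a new guideline dimension or discipline automatically adds a full slice of tasks.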

2. Training Pipeline

- SFT Cold-Start: Fine-tunes the base model on "full-dimensional optimal samples" to learn medical logic, safety, and professional expression.

- Reward Model (RM) Training:

  - Input: a 5-tuple $(q, a^{b}_{i,q}, a^{r}_{i,q}, a^{w}_{i,q}, \mathrm{Desc}_i)$, i.e., the question, the good, medium, and poor answers, and the description of dimension $i$.
  - Loss: Combines Bradley-Terry (BT) loss (pairwise quality comparison) and Geometric Constraint (GC) loss (ensures the score order aligns with medical logic).

- Reinforcement Learning (RL): Uses GRPO with RM scores as rewards to optimize the SFT model, enabling continuous alignment with medical standards.
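A minimal sketch of the Bradley-Terry part of the reward-model loss on one (good, medium, poor) triple, assuming the reward model outputs one scalar score per answer for the dimension being trained. The BT term applies $-\log\sigma(r_{\text{better}} - r_{\text{worse}})$ to each of the three ordered pairs. The card does not state how pairs are weighted, what form the GC term takes, or its weight, so here the pairs are averaged uniformly, the GC penalty is passed in as a precomputed number, and `lam=0.1` is a placeholder.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def bradley_terry_loss(r_good: float, r_med: float, r_poor: float) -> float:
    """Mean of -log sigmoid(r_better - r_worse) over the three ordered pairs."""
    pairs = [(r_good, r_med), (r_med, r_poor), (r_good, r_poor)]
    return -sum(log_sigmoid(better - worse) for better, worse in pairs) / len(pairs)


def reward_model_loss(
    r_good: float, r_med: float, r_poor: float, gc_penalty: float, lam: float = 0.1
) -> float:
    """Hypothetical total loss: BT ranking term plus a weighted geometric-constraint term."""
    return bradley_terry_loss(r_good, r_med, r_poor) + lam * gc_penalty


# Scores already in the right order give a small BT loss; reversed order gives a large one.
print(bradley_terry_loss(2.0, 0.5, -1.0))
print(bradley_terry_loss(-1.0, 0.5, 2.0))
```

The BT term alone only cares about score differences, so it cannot say where the medium answer should sit relative to the other two; that is the gap a separate geometric constraint is meant to fill.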
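GRPO replaces a learned value baseline with a group baseline: for each prompt it samples a group of responses, scores each one (here, with the reward model), and normalizes every reward by the group's mean and standard deviation. The sketch below computes only these group-relative advantages; the clipped policy-ratio objective and the KL term are omitted, and the reward values, group size, and `eps` are illustrative.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """A_i = (r_i - mean(r)) / (std(r) + eps) for one prompt's group of sampled responses."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical RM scores for four sampled answers to the same clinical question.
rm_scores = [0.9, 0.4, 0.4, -0.1]
advantages = group_relative_advantages(rm_scores)
print([round(a, 3) for a in advantages])
# Above-average answers get positive advantages (reinforced); below-average ones are pushed down.
```

Because advantages are relative within a group, only the RM's ranking and spread of scores for the same question matter, not their absolute scale.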

3. Efficiency Optimization

- Synthetic Data Support: Geometric constraints reduce reliance on scarce high-quality expert annotations.

- Deployment Compatibility: Supports 4-bit quantization for low-resource environments (e.g., single RTX 4090).
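A back-of-the-envelope check of the single-GPU claim, counting weights only: at 4 bits each parameter takes half a byte. The 32e9 parameter count is a rounded assumption, and the estimate ignores the KV cache, activations, and quantization overhead (scales, zero points), all of which add to the total.

```python
def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    """Memory needed for model weights only, in GiB."""
    return num_params * bits_per_param / 8 / 2**30


NUM_PARAMS = 32e9        # rounded size of a Qwen3-32B-class model (assumption)
RTX_4090_VRAM_GIB = 24   # the RTX 4090 has 24 GB of VRAM

for bits in (16, 8, 4):
    gib = weight_memory_gib(NUM_PARAMS, bits)
    fits = "fits" if gib < RTX_4090_VRAM_GIB else "does not fit"
    print(f"{bits:>2}-bit weights: {gib:5.1f} GiB -> {fits} in {RTX_4090_VRAM_GIB} GiB")
```

Only the 4-bit case (about 15 GiB of weights) leaves headroom on a 24 GB card, which is consistent with the note above; the remaining memory bounds the usable context length.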

⚙️ Quick Start

1. Install Dependencies

```bash
pip install transformers torch vllm "sglang>=0.4.6.post1"
```

2. Load Model & Run Inference

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load model and tokenizer (replace with Hugging Face repo once released)
model_name = "mingpinDZJ/Shanzhi-M1"
tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    trust_remote_code=True,
    torch_dtype="bfloat16",
    device_map="auto",
)

# Example: Medical query (pediatric medication safety)
prompt = "A 3-year-old child (pediatric scenario) is taking acetaminophen for fever. Can they also take compound cold medicine? Please explain the risks and recommendations."

# Format chat input (supports multi-turn conversations)
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
    enable_thinking=True,  # Qwen3 chat-template flag: emit a <think> reasoning block first
)

# Generate response
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=1024,
    do_sample=True,   # needed for temperature/top_p to take effect
    temperature=0.1,  # Low temperature for clinical accuracy
    top_p=0.95,
)

# Parse output (separate reasoning process from final answer)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()
think_end_id = tokenizer.convert_tokens_to_ids("</think>")
try:
    # Locate the last reasoning-end token (</think>)
    reason_end_idx = len(output_ids) - output_ids[::-1].index(think_end_id)
    reasoning = tokenizer.decode(output_ids[:reason_end_idx], skip_special_tokens=True)
    answer = tokenizer.decode(output_ids[reason_end_idx:], skip_special_tokens=True)
except ValueError:
    # No </think> token was generated
    reasoning = "No explicit reasoning logged."
    answer = tokenizer.decode(output_ids, skip_special_tokens=True)

# Print results
print(f"Clinical Reasoning: {reasoning}")
print(f"Final Answer: {answer}")
```

3. Efficient Deployment (SGLang/vLLM)

SGLang Server (Supports MTP Inference)

```bash
python -m sglang.launch_server \
  --model-path mingpinDZJ/Shanzhi-M1 \
  --reasoning-parser qwen3 \
  --mem-fraction-static 0.9
```

vLLM Server (High Throughput)

```bash
vllm serve mingpinDZJ/Shanzhi-M1 \
  --reasoning-parser qwen3 \
  --tensor-parallel-size 1 \
  --dtype bfloat16
```
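Both servers expose an OpenAI-compatible `/v1/chat/completions` endpoint. The sketch below queries it using only the Python standard library. It assumes the default ports (8000 for vLLM, 30000 for SGLang), that the served model name is the model path, and that the reasoning parser returns the thinking text in a `reasoning_content` field of the message; check these against your server version.

```python
import json
import urllib.request
from typing import Optional, Tuple

VLLM_URL = "http://localhost:8000/v1/chat/completions"     # vLLM default port (assumption)
SGLANG_URL = "http://localhost:30000/v1/chat/completions"  # SGLang default port (assumption)
MODEL = "mingpinDZJ/Shanzhi-M1"


def build_payload(prompt: str, temperature: float = 0.1, max_tokens: int = 1024) -> dict:
    """OpenAI-style chat request body for a single user turn."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def ask(prompt: str, url: str = VLLM_URL) -> Tuple[Optional[str], str]:
    """POST the request and return (reasoning, answer) from the first choice."""
    request = urllib.request.Request(
        url,
        data=json.dumps(build_payload(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        message = json.load(response)["choices"][0]["message"]
    # With a reasoning parser enabled, the thinking text is expected in a separate field.
    return message.get("reasoning_content"), message["content"]


# Usage (requires a running server):
#   reasoning, answer = ask("Can a 3-year-old on acetaminophen also take compound cold medicine?")
#   reasoning, answer = ask("...", url=SGLANG_URL)
```

Any OpenAI-compatible client library would work the same way; the standard-library version just avoids an extra dependency.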

⚠️ Usage Notices

- Medical Disclaimer: This model is for research, medical education, and clinical decision support only — it cannot replace professional diagnosis, treatment, or medical advice.

- Intended Use Cases:
  - Medical student training (case simulation, knowledge verification).
  - Healthcare provider decision support (second opinion, guideline alignment).
  - Public health education (general health consultation).

- Safety Guidelines:
  - Always validate model outputs against authoritative medical guidelines (e.g., WHO, UpToDate).
  - Use under the supervision of licensed medical professionals in clinical settings.

- Data Integrity: Training data is fully independent of the HealthBench evaluation set to avoid benchmark contamination and overfitting.

📄 License

Licensed under the Apache License 2.0. Permitted for:

- Non-commercial research and education.

- Commercial use with proper attribution and compliance with medical regulations (e.g., HIPAA, GDPR for patient data).

🤝 Acknowledgements

- Base Model: Qwen3-32B (by Alibaba Cloud) for strong general language capabilities.

- Evaluation Benchmark: HealthBench (Arora et al., 2025) for clinically grounded performance testing.

- Open-Source Tools: Hugging Face Transformers, vLLM, SGLang for model deployment and inference.

- Expert Contribution: Board-certified physicians for defining 3D medical standards and validating rubrics.

📞 Contact Us

- Official Website: 大专家.COM

**Bridging AI and Clinical Practice — Making Trustworthy Medical AI Accessible**
