Port changes from Milestone-5

- .gitignore +0 -2

- Dockerfile +15 -2

- docs/img/gui_detailed.png +3 -0

- docs/img/gui_detailed_input.png +3 -0

- docs/img/gui_ex.png +3 -0

- docs/img/gui_main_dashboard.png +3 -0

- docs/img/gui_quick_input.png +3 -0

- hopcroft_skill_classification_tool_competition/config.py +2 -2

- hopcroft_skill_classification_tool_competition/main.py +2 -0

- requirements.txt +2 -0

- scripts/start_space.sh +20 -5

.gitignore

CHANGED

@@ -188,5 +188,3 @@ cython_debug/
 # PyPI configuration file
 .pypirc
 .github/copilot-instructions.md
-
-docs/img/

Dockerfile

CHANGED

@@ -10,6 +10,7 @@ ENV PYTHONDONTWRITEBYTECODE=1 \
 # Install system dependencies
 RUN apt-get update && apt-get install -y \
     git \
+    dos2unix \
     && rm -rf /var/lib/apt/lists/*

 # Create a non-root user
@@ -21,12 +22,24 @@ WORKDIR /app
 # Copy requirements first for caching
 COPY requirements.txt .

-#
-
+# Remove -e . from requirements.txt to avoid installing the project before copying it
+# and install dependencies
+RUN sed -i '/-e \./d' requirements.txt && \
+    pip install --no-cache-dir -r requirements.txt

 # Copy the rest of the application
 COPY --chown=user:user . .

+# Ensure the user has permissions on the app directory (needed for dvc init if .dvc is missing)
+RUN chown -R user:user /app
+
+# Fix line endings and permissions for the start script
+RUN dos2unix scripts/start_space.sh && \
+    chmod +x scripts/start_space.sh
+
+# Install the project itself
+RUN pip install --no-cache-dir .
+
 # Make start script executable
 RUN chmod +x scripts/start_space.sh
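The `sed -i '/-e \./d'` step in the Dockerfile hunk deletes every line of `requirements.txt` that contains the text `-e .`. A minimal Python sketch of the same filter may make the matching rule easier to see; the function name `strip_editable_self` and the sample input are illustrative assumptions, not part of this commit.

```python
import re

# Same pattern as the sed address '/-e \./': a literal "-e ." anywhere in the line.
EDITABLE_SELF = re.compile(r"-e \.")


def strip_editable_self(requirements_text: str) -> str:
    """Return the requirements text without lines that contain '-e .'."""
    kept = [
        line
        for line in requirements_text.splitlines()
        if not EDITABLE_SELF.search(line)
    ]
    return "\n".join(kept) + "\n"


# Hypothetical sample file content, for illustration only.
sample = "dvc\n-e .\nmlflow==2.16.0\n"
print(strip_editable_self(sample), end="")
```

Because the pattern is not anchored, a line such as `-e ./some_subpackage` would be removed as well. That is harmless if `-e .` is the only editable entry in the file, which is assumed here.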

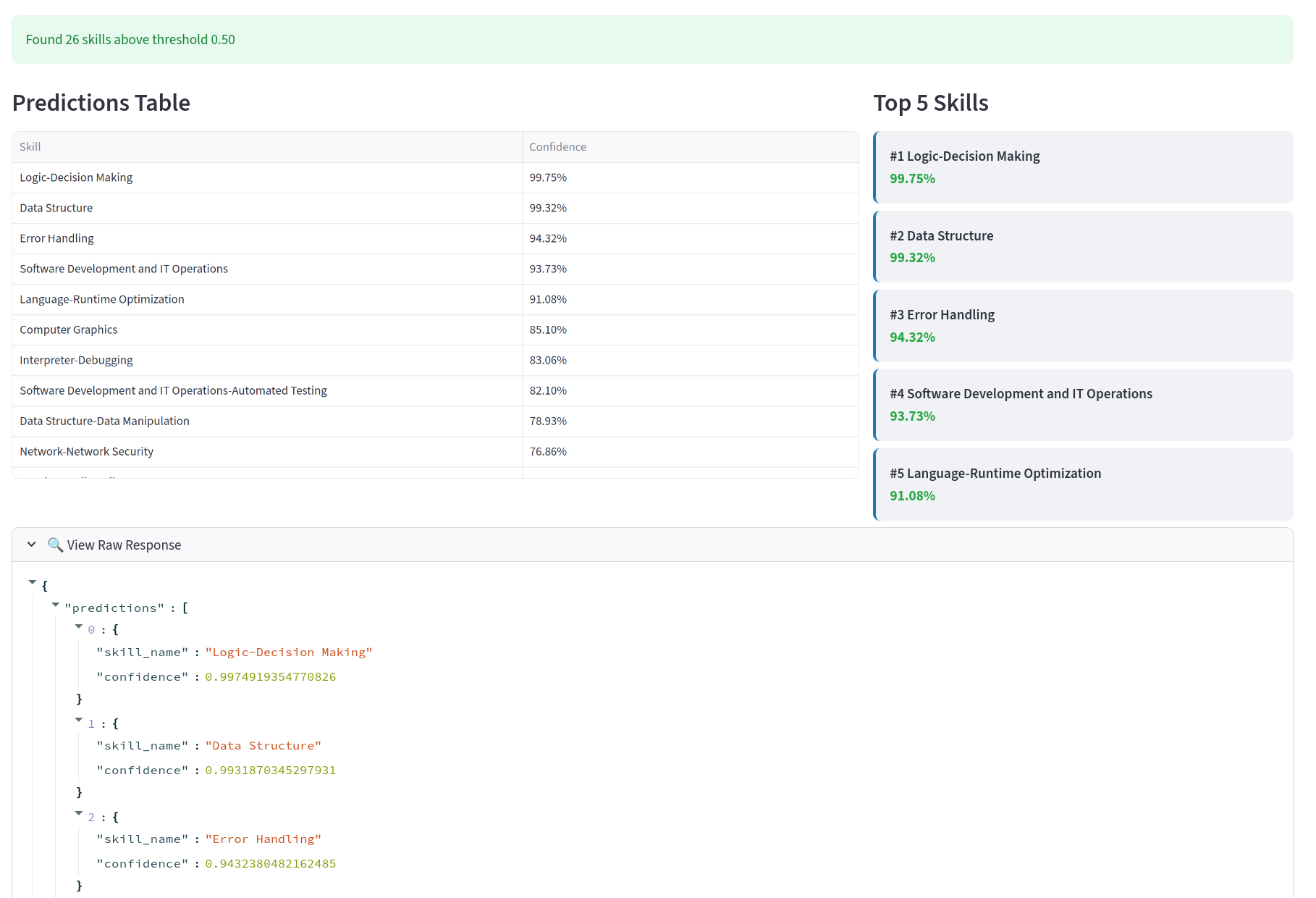

docs/img/gui_detailed.png

ADDED

|

Git LFS Details

|

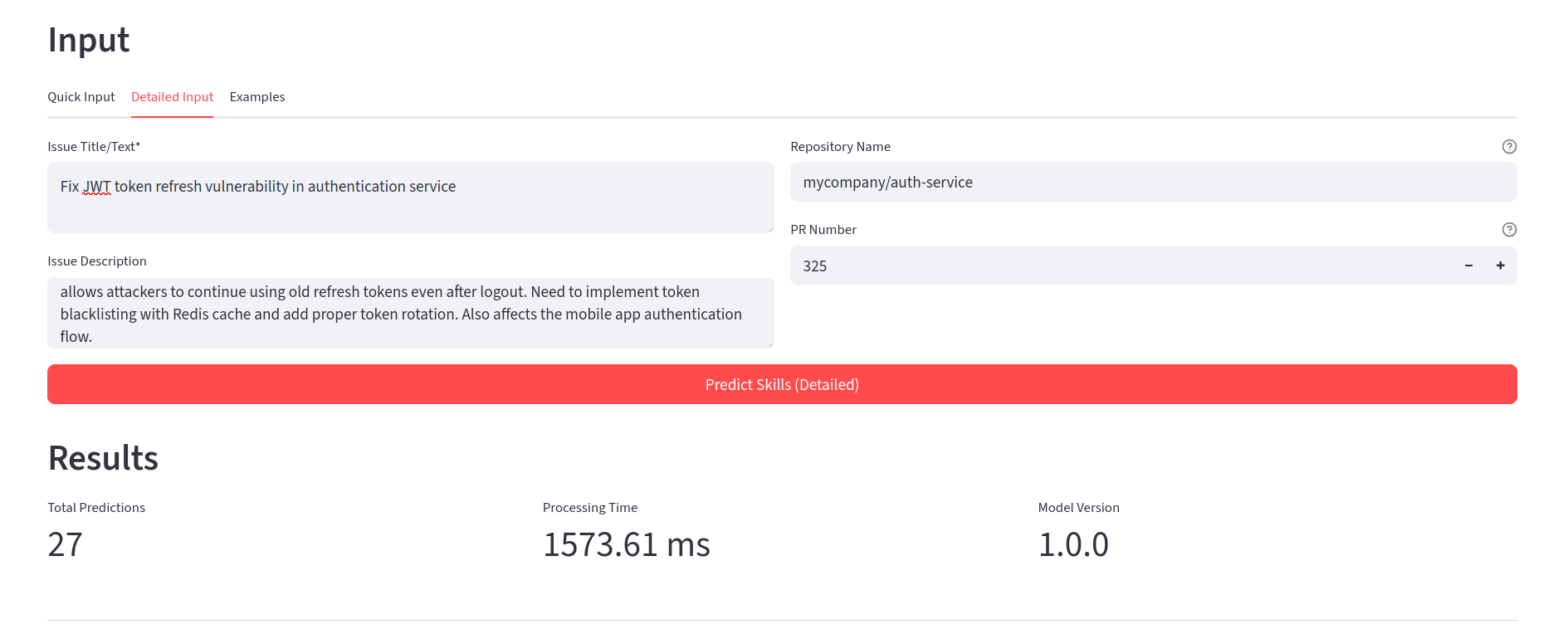

docs/img/gui_detailed_input.png

ADDED

|

Git LFS Details

|

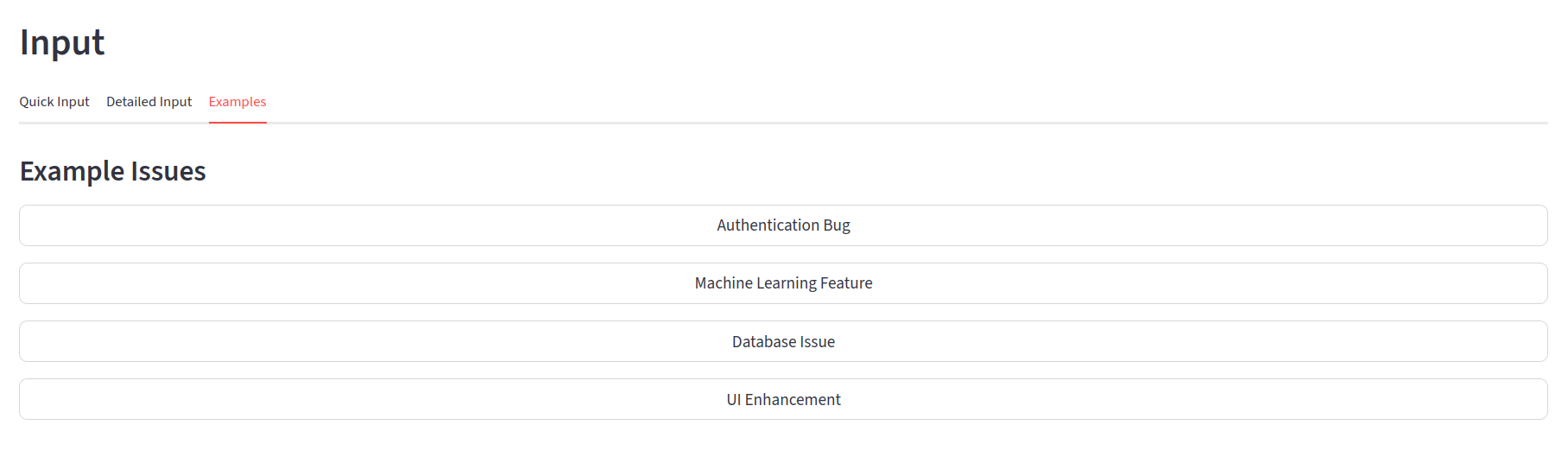

docs/img/gui_ex.png

ADDED

|

Git LFS Details

|

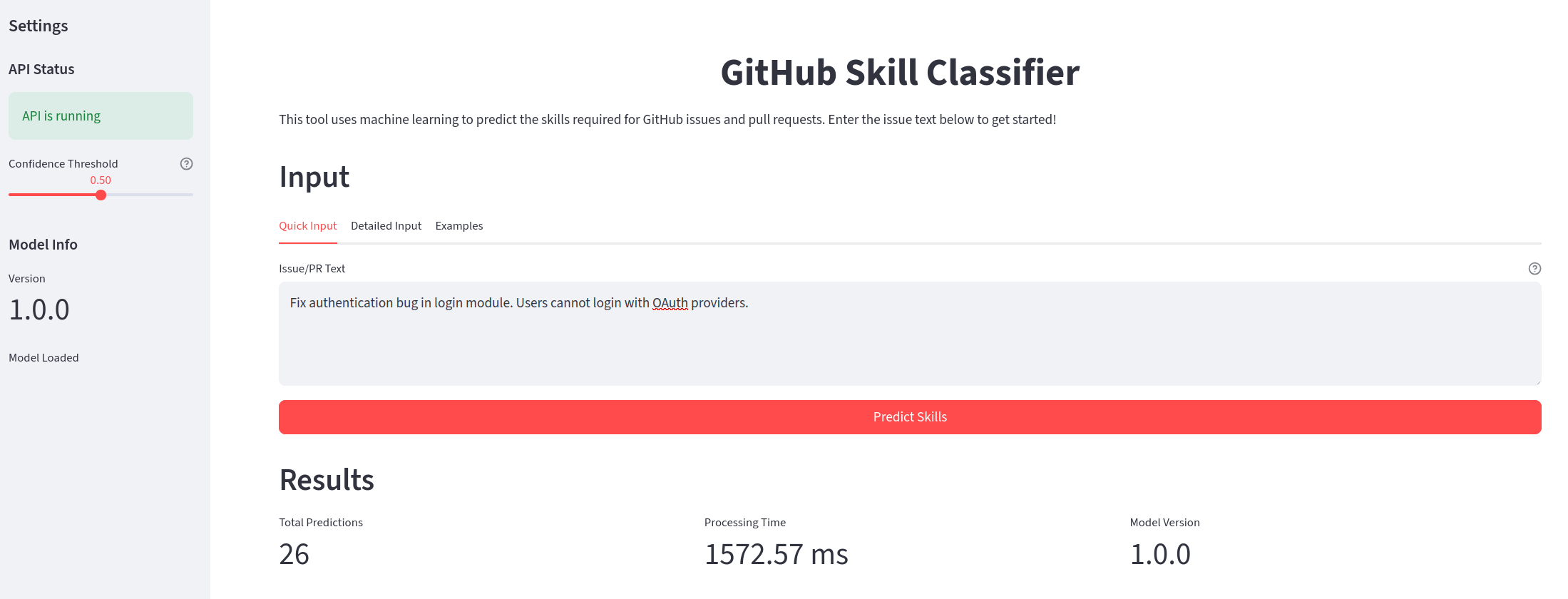

docs/img/gui_main_dashboard.png

ADDED

|

Git LFS Details

|

docs/img/gui_quick_input.png

ADDED

|

Git LFS Details

|

hopcroft_skill_classification_tool_competition/config.py

CHANGED

@@ -30,10 +30,10 @@ EMBEDDING_MODEL_NAME = "all-MiniLM-L6-v2"
 # API Configuration - which model to use for predictions
 API_CONFIG = {
     # Model file to load (without path, just filename)
-    "model_name": "
+    "model_name": "random_forest_tfidf_gridsearch.pkl",
     # Feature type: "tfidf" or "embedding"
     # This determines how text is transformed before prediction
-    "feature_type": "
+    "feature_type": "tfidf",
 }

 # Training configuration

hopcroft_skill_classification_tool_competition/main.py

CHANGED

@@ -194,6 +194,8 @@ async def predict_skills(issue: IssueInput) -> PredictionRecord:
         )

     except Exception as e:
+        import traceback
+        traceback.print_exc()
         raise HTTPException(
             status_code=status.HTTP_500_INTERNAL_SERVER_ERROR,
             detail=f"Prediction failed: {str(e)}",
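The `main.py` hunk prints the traceback to stderr before turning the exception into an HTTP 500. As a possible alternative, the sketch below records the same traceback through the standard `logging` module. It is standalone, so `RuntimeError` stands in for `HTTPException`, and the logger name `hopcroft.api` and the helper `run_prediction` are hypothetical names that do not appear in the commit.

```python
import logging

# Hypothetical logger name; the project may configure logging differently.
logger = logging.getLogger("hopcroft.api")


def run_prediction(predict, payload):
    """Call predict(payload); on failure, log the traceback and re-raise.

    RuntimeError is a stand-in for fastapi.HTTPException so that the
    sketch runs with the standard library only.
    """
    try:
        return predict(payload)
    except Exception as exc:
        # logger.exception logs at ERROR level and appends the active traceback,
        # which is the information traceback.print_exc() writes to stderr.
        logger.exception("Prediction failed")
        raise RuntimeError(f"Prediction failed: {exc}") from exc
```

Routing the traceback through a logger lets the deployment decide where it ends up (stderr, a file, a collector), whereas `traceback.print_exc()` always writes to stderr.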

requirements.txt

CHANGED

@@ -13,6 +13,7 @@ seaborn

 # Data versioning
 dvc
+dvc-s3
 mlflow==2.16.0
 protobuf==4.25.3

@@ -25,6 +26,7 @@ fastapi[standard]>=0.115.0
 pydantic>=2.0.0
 uvicorn>=0.30.0
 httpx>=0.27.0
+streamlit>=1.28.0

 # Development tools
 ipython

scripts/start_space.sh

CHANGED

@@ -3,6 +3,13 @@
 # Fail on error
 set -e

+# Ensure DVC is initialized (in case .dvc folder was not copied)
+if [ ! -d ".dvc" ]; then
+    echo "Initializing DVC..."
+    dvc init --no-scm
+    dvc remote add -d origin https://dagshub.com/se4ai2526-uniba/Hopcroft.dvc
+fi
+
 # Determine credentials
 # Prefer specific DAGSHUB vars, fallback to MLFLOW vars (often the same for DagsHub)
 USER=${DAGSHUB_USERNAME:-$MLFLOW_TRACKING_USERNAME}
@@ -20,9 +27,17 @@ fi

 echo "Pulling models from DVC..."
 # Pull only the necessary files for inference
-dvc pull models/random_forest_tfidf_gridsearch.pkl \
-    models/tfidf_vectorizer.pkl \
-    models/label_names.pkl
+dvc pull models/random_forest_tfidf_gridsearch.pkl.dvc \
+    models/tfidf_vectorizer.pkl.dvc \
+    models/label_names.pkl.dvc
+
+echo "Starting FastAPI application in background..."
+uvicorn hopcroft_skill_classification_tool_competition.main:app --host 0.0.0.0 --port 8000 &
+
+# Wait for API to start
+echo "Waiting for API to start..."
+sleep 10

-echo "Starting
-
+echo "Starting Streamlit application..."
+export API_BASE_URL="http://localhost:8000"
+streamlit run hopcroft_skill_classification_tool_competition/streamlit_app.py --server.port 7860 --server.address 0.0.0.0
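The start script waits a fixed `sleep 10` between launching uvicorn and starting Streamlit. If the API takes longer than that to load its models, the GUI could come up before the backend is reachable. One possible refinement, sketched here under that assumption, is to poll the API port until it accepts TCP connections. The helper `wait_for_port` and its default timeout and interval are illustrative and not part of this commit.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll host:port until a TCP connection succeeds or the timeout expires.

    Returns True as soon as a connection is accepted, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # create_connection raises OSError while nothing is listening yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)
    return False
```

A caller could run `wait_for_port("localhost", 8000)` before launching Streamlit and exit with an error if it returns `False`. An accepted TCP connection only shows that uvicorn is listening, not that the models have finished loading, so an HTTP health check would be a stricter test if the API exposes one.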